Panda 4.2 Released: History, FAQs & Thoughts

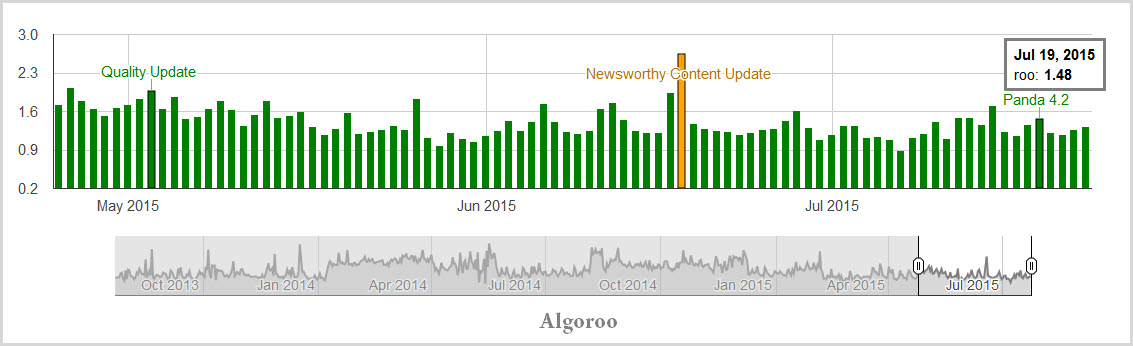

Posted On: July 23rd, 2015 By: Dillip Kumar Mohanty To: Google Algorithm, Google PANDA

Barry Schwartz has confirmed in his post that Panda 4.2 started rolling out last weekend (July 18th, 2015), after a wait of almost 10 months.

Information about Panda 4.2 so far:

- Confirmed by Google

- Started last weekend

- Rolling out slowly

- Will take months to fully roll out

- 2-3% of English queries affected

- Will impact your site slowly (I will explain why I put the last point in red)

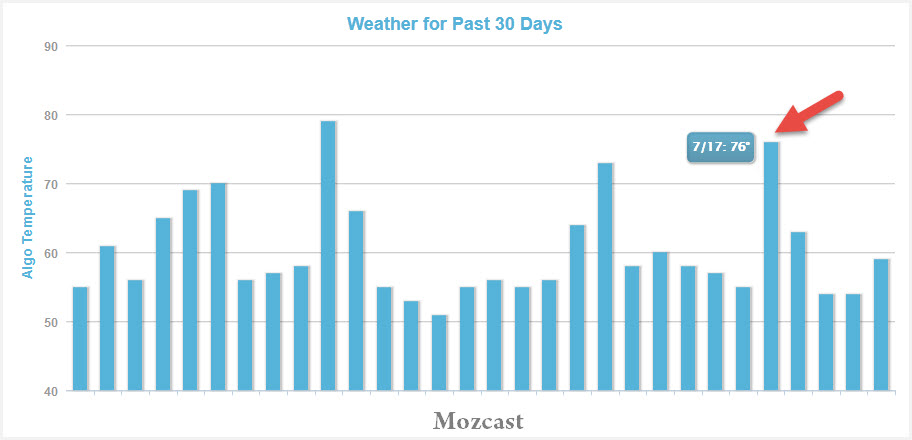

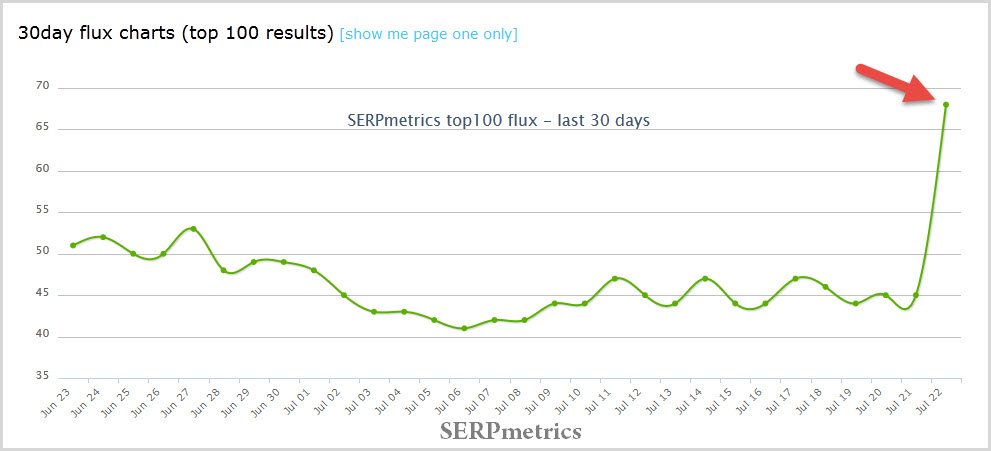

Although Barry’s report gives the date as July 18th, let’s see what different Google SERP tracking tools are saying about the update.

The different tools are indicating different dates! That’s natural: it depends on the sets of keywords they track, and, as per Google, the update is rolling out slowly and only 2-3% of English queries are impacted.

A Brief History of Panda Updates:

- The Panda algorithm came into effect on Feb. 24, 2011, affecting 11.8% of English queries in the USA only.

- Its sole aim was to differentiate high quality sites with original, informative, in-depth content from low quality sites having no value to users.

- It was rolled out internationally for English queries on April 11, 2011.

- So far there have been 30 updates in this series, including this latest 4.2, most of which have been confirmed by Google.

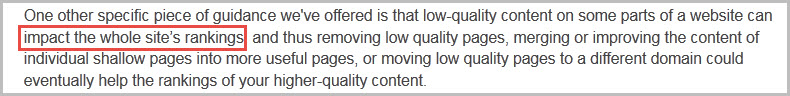

- It is a site-wide penalty, which means a site with enough low quality pages would be impacted as a whole, including its high quality pages.

FAQs & Thoughts on Panda Algorithm

How can I know that I’m impacted by Panda?

Now, this question has been discussed a thousand times in different places, and the common answer is that if you see a huge drop in traffic in your GA on that date, then you have been affected by Panda.

My site is a small one, with low and inconsistent daily traffic. How can I confirm that I’m affected by it?

That’s a critical one, but yes, you may draw some conclusions from your GA organic traffic data. Just compare the traffic after the update date with the traffic in the period before it. If you find that every one of your pages has less traffic than in the previous period, that might indicate that your site is affected by Panda.
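The comparison described above can be sketched in a few lines of Python. This is only a rough illustration, not a Panda detector: the `(day, page, sessions)` row format, the function names and the 14-day window are my own assumptions, so adapt them to whatever your GA export actually looks like.

```python
from collections import defaultdict
from datetime import date, timedelta


def compare_page_traffic(rows, update_date, window_days=14):
    """Compare average daily organic sessions per page before and after a date.

    rows: iterable of (day, page, sessions) tuples, e.g. built from a GA
          export (hypothetical format, not an official GA schema).
    Returns {page: (avg_before, avg_after, pct_change)}; pct_change is None
    when the page had no traffic in the "before" window.
    """
    start = update_date - timedelta(days=window_days)
    end = update_date + timedelta(days=window_days)
    before = defaultdict(int)
    after = defaultdict(int)
    for day, page, sessions in rows:
        if start <= day < update_date:
            before[page] += sessions
        elif update_date <= day < end:
            after[page] += sessions

    result = {}
    for page in set(before) | set(after):
        avg_before = before[page] / window_days
        avg_after = after[page] / window_days
        if avg_before == 0:
            pct = None  # no baseline, so a percentage change is meaningless
        else:
            pct = (avg_after - avg_before) / avg_before * 100
        result[page] = (avg_before, avg_after, pct)
    return result


def share_of_pages_down(result):
    """Fraction of pages (with a usable baseline) whose traffic dropped."""
    changes = [pct for _, _, pct in result.values() if pct is not None]
    if not changes:
        return 0.0
    return sum(1 for pct in changes if pct < 0) / len(changes)
```

If `share_of_pages_down` comes out close to 1.0 for the weeks around the update date, almost every page lost traffic, which is what a site-wide hit would look like. On a small site with noisy traffic, treat it as a hint only and try a longer window before drawing conclusions.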

Is that a confirmation?

No, not 100%. As Panda is a site-wide penalty, the above method may be taken as a strong indication, but we should keep in mind that Google keeps tweaking its algorithm after every major update. So in many cases GA shows inconsistent traffic after a major update. Glenn Gabe described this beautifully in his post as Panda tremors.

Is Panda real-time?

Now that is an interesting question, and it has been a topic of discussion and confusion since March 2013, when Matt Cutts declared for the first time that Panda was being incorporated into Google’s main algorithm. Then in June of the same year he declared that Panda would be rolled out on a monthly basis, with each rollout taking about 10 days to complete. That left the SEO community confused about the state of Panda.

The same thing happened again this year. Around March, Gary Illyes declared that Panda was real-time, but John Mueller then confirmed that no, it wasn’t. Such statements blurred the whole picture. But this 4.2 release indicates that Panda is still not real-time. It is run intermittently. The trend remains the same: either they refresh the algorithm or they add new signals, and they roll it out at different times.

If it is not real-time, then why have some sites seen a recovery from a Panda penalty when Google had not confirmed any Panda update around that time?

The Panda algorithm is a really complex one because it tries to test the quality factors of sites. That is very difficult, as quality is a subjective issue that can’t easily be quantified by a bunch of machine-derived signals. So Google must be using numerous signals to assess quality, some of which may be showing positive results in separating high quality sites from low quality ones. It is possible that, once proven, such signals are incorporated into the core algorithm. When that happens, sites that were affected by those signals and have since fixed their issues might see a recovery at that time, whereas sites that were affected by other signals might not. Hence the confusion about a possible Panda update.

Is it confirmed that Panda is a site-wide algorithm?

Yes, it is confirmed. Amit Singhal wrote an in-depth post after the first Panda release in which he clearly mentioned that low quality pages on some parts of a site can affect the ranking of the whole site.

How much content should I place in my page?

This is a really common misconception about the Panda algorithm! Many say that in-depth, lengthy content is Panda-friendly, and by this they assume that content refers to text only. Panda is there to reward high quality sites that provide quality information and relevant data in less time. So I believe it is all about your ability to present information in the right manner, so that a user gets everything necessary without much effort. Content length is therefore not a big matter. What matters most is how you present the relevant information. If a query can be answered satisfactorily in a few lines, then you need not write a 2000-word article. In some cases you can even present the relevant content through tables, images etc.

So, you shouldn’t focus on keywords; rather, FOCUS ON KEY THEMES.

Queries that need in-depth information should be answered with lengthy content containing unique information, presented in such a manner that a user would like to go through each part of it from top to bottom.

I have several pages with unique but short content. Is that an issue?

No, if the pages answer different queries, then that’s fine. But if the queries are inter-related, you can place them on a single page, as in a FAQ page. In that case, it would also be easier for Google to crawl and index them.

I have a small and little-known site. How can I be authoritative?

Authority doesn’t necessarily mean a well-known site. Produce content like an authority site in your niche. Be specific to the needs of your audience. Write unique content with facts and figures, and link back to authority sites so that users and bots will know that your content matches those authority sites. In that way a user may conclude that whatever you write is based on established facts and would like to come back to your site more frequently. But never copy from those sites. Just link to them to confirm your facts, not for in-depth information.

How can I avoid thin and duplicate content issues?

Well, Dr. Peter J. Meyers from Moz has already covered these things in one of his in-depth articles. Duplication doesn’t necessarily mean publishing information that can also be found on other sites, though the same information presented in exactly the same manner is a clear case of duplication. Suppose you run a local site selling products. Other sites in your locality selling the same products may be using the same information as yours. That is natural and you can’t avoid it, and Google is not going to hit every such site with Panda. You need to be unique and different in how you help users. That’s the secret.

Is Panda all about content?

No, the Google Panda algorithm is all about quality. Content is just a big part of it. It is also about the authenticity of your information, user experience and presentation. So don’t just focus on content and quantity. The focus should be on quality content, user experience and wise interlinking, while avoiding issues such as above-the-fold problems, too many ads, lengthy and unnecessary content, and duplicate or near-duplicate content within the site or across other sites. Think only about users, not about search engines.

What does it mean that this Panda 4.2 would affect sites slowly?

As I’ve mentioned above, Panda consists of numerous signals. Suppose that all the signals, when rolled out simultaneously, send mixed results and do not produce the results expected. In that case, Google may try to evaluate the newer signals first and the older, more established ones later on. In this way they can also test how much weight to assign to each signal. Maybe they are rolling out the common signals for all sites and all niches first, and will then roll out the niche-specific or locale-specific signals. All of this is pure speculation. In the coming days I hope experts will clarify what exactly it means. Till then, fingers crossed.
